Are you feeling unwell and wondering whether you are depressed? Waiting lists in mental health care are still long, and the family doctor is busy too. Is it wise to wait, or is talking to a chatbot the solution?

You are often vulnerable when you have a question about mental health. If you suspect that you have an anxiety disorder or burnout, you may need support and empathy more than ever. Therapists wonder whether contact with a chatbot can be compared to human contact, says psychologist Anita Hubner. “Don’t you miss out on many things that are only possible from person to person?”

There is a lot of discussion about that, says Pim Haselager. He is professor of societal implications of artificial intelligence at Radboud University and regularly gives lectures in mental health care, where the subject is on the agenda. According to him, the most important question is: do you use ChatGPT for a mental health question as a replacement or as a supplement?

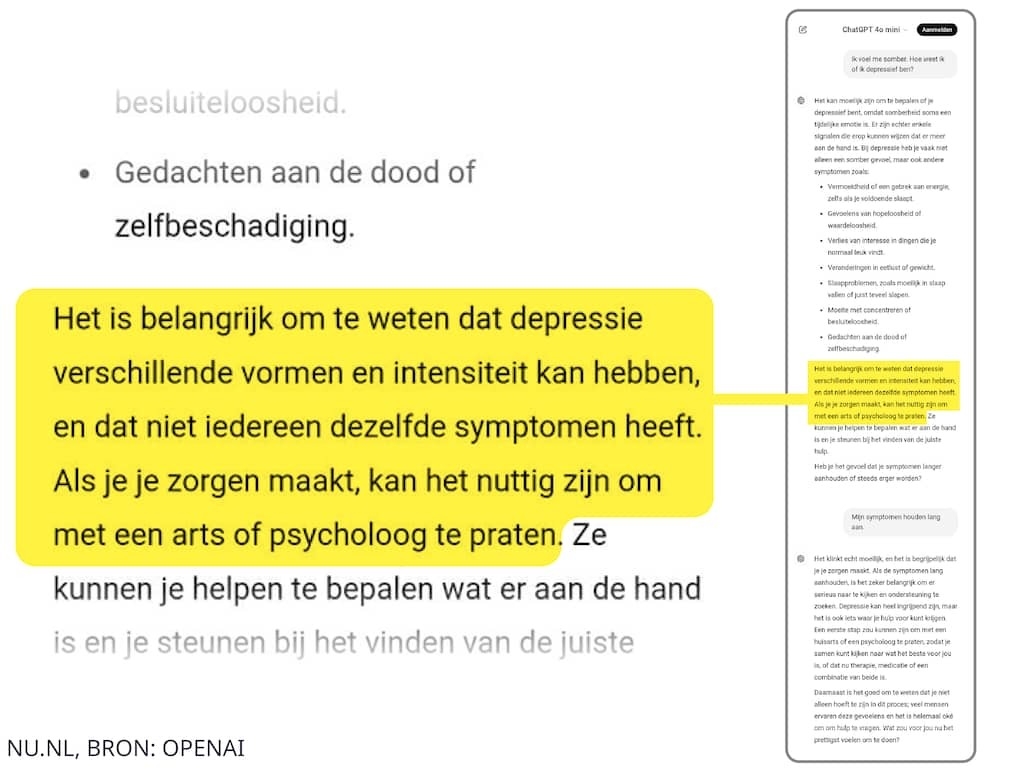

In NU.nl’s conversation with ChatGPT about how you can tell whether you are depressed, the chatbot gives a nuanced answer. For example, feeling gloomy does not immediately mean that you are depressed. The bot also emphasizes that depression takes different forms and can feel intense in all sorts of ways.

Hubner thinks it’s a great response. “Well formulated and general, in such a way that everyone actually benefits from it.” She finds it positive that the chatbot states that symptoms vary and that it can be useful to talk to a doctor or psychologist if you are worried. According to her, that is wise advice.

A chatbot has mainly learned how language works

OpenAI models such as ChatGPT rely on information they collect by scraping the web. “And especially what Google thinks the web is,” says Stephan Raaijmakers, professor by special appointment of communicative AI at Leiden University. That search engine ranks information by popularity and in this way influences which information is included and which is not.

A chatbot can then formulate an answer because it has learned how language works. “They are first and foremost trained on which word often follows another word, and can produce text in this way. But they are also trained on what human specialists consider good texts, and on being able to follow human instructions.”

He warns of the biases that almost always come with this. A chatbot can unwittingly pick up certain biases from the source texts, the learned tasks and human feedback, wrongly take them as truth and present them as such.

According to professor Haselager, talking to a chatbot often certainly has an influence. “Because you are dealing with machines that seem increasingly human, while they are not. That changes the way we treat each other.” He cites voice assistant Alexa as an example. “You could give her very direct commands, for example. Hey Alexa, do this. Hey Alexa, give me that information. Because she speaks with a female voice, there were children who suddenly started addressing their mother in the same rude way.”

Learning to deal with setbacks is important

This voice assistant thus made certain manners worse. You also have to remember that machines are always there for you and always have time, while that is not the case with your partner, a friend or a colleague. “When you used to play with your brothers or sisters, they probably didn’t want the same thing as you every time. That is important for your development. You have to learn to deal with those setbacks. If you don’t, that can affect your social skills. You make people socially vulnerable that way.”

That is why Haselager does not think it is a good idea to completely replace human contact. “Chatbots such as ChatGPT sometimes seem pretty smart and can do a lot, but they do not have human intelligence. They can produce words and sentences about, for example, sadness, but they do not understand what it actually means to be sad.”

What if you only use such a chatbot occasionally? Psychologist Hubner can see some merit in that. “Suppose you have a panic attack in the middle of the night; then it is not that easy to get to speak to a care provider. Turning to ChatGPT may not solve the problem then, but it can provide temporary relief.”

Looking for a balance between consulting room and chatbot

“It has to be both,” she says. “These kinds of changes cannot be stopped. So it seems better to me to look for a balance between therapy in the consulting room and the form of therapy that a chatbot can offer you. After all, your problems do not disappear when you walk out of the consulting room; the interesting part is precisely how you apply what you have learned afterwards.”

It is also good to know that chatbots tend to make up facts or circumstances and present them with great certainty, says Raaijmakers. If you only need some help writing a cover letter, that is different from using the information for a medical treatment plan. Do not rely on it completely, but use a chatbot as a supplement.

Hubner does not expect things to get out of hand or vulnerable people to retreat even further into their shell. “There will always be people who do not seek help. It remains society’s responsibility to be alert to that.” Chatting occasionally to ask what you can do about that bad feeling is therefore not immediately bad. But it is important to stay critical, she emphasizes. “The long-term consequences are still unknown. Further research is needed for that.”
